| Name: | Jiapeng Tang |
|---|---|
| Position: | Ph.D. Candidate |
| E-Mail: | jiapeng.tang@tum.de |
| Phone: | +49-89-289-18489 |
| Room No: | 02.07.034 |

Bio

Jiapeng Tang is a Ph.D. student at the Visual Computing Lab, advised by Prof. Matthias Nießner. His research focuses on understanding and reconstructing dynamic 3D environments. He received his Bachelor's and Master's degrees in Electronic Engineering from South China University of Technology.

Research Interest

3D Reconstruction, Non-rigid Reconstruction and Tracking, Scene Understanding

Publications

2025

| ROGR: Relightable 3D Objects using Generative Relighting |

|---|

| Jiapeng Tang, Matthew Levine, Dor Verbin, Stephan Garbin, Matthias Nießner, Ricardo Martin-Brualla, Pratul P. Srinivasan, Philipp Henzler |

| NeurIPS 2025 Spotlight |

| Given an image collection captured under unknown lighting, ROGR reconstructs a relightable neural radiance field that can be rendered under any novel environment map without further optimization, enabling on-the-fly relighting and novel view synthesis. |

| [code][bibtex][project page] |

| SHeaP: Self-Supervised Head Geometry Predictor Learned via 2D Gaussians |

|---|

| Liam Schoneveld, Zhe Chen, Davide Davoli, Jiapeng Tang, Saimon Terazawa, Ko Nishino, Matthias Nießner |

| ICCV 2025 |

| SHeaP learns a state-of-the-art, real-time head geometry predictor through self-supervised learning on only 2D videos. The key idea is to use 2D Gaussian Splatting instead of mesh rasterization when computing the photometric reconstruction loss. |

| [video][bibtex][project page] |

| GAF: Gaussian Avatar Reconstruction from Monocular Videos via Multi-view Diffusion |

|---|

| Jiapeng Tang, Davide Davoli, Tobias Kirschstein, Liam Schoneveld, Matthias Nießner |

| CVPR 2025 |

| Given a short, monocular video captured by a commodity device such as a smartphone, GAF reconstructs a 3D Gaussian head avatar, which can be re-animated and rendered into photo-realistic novel views. Our key idea is to distill the reconstruction constraints from a multi-view head diffusion model in order to extrapolate to unobserved views and expressions. |

| [video][code][bibtex][project page] |

2024

| GGHead: Fast and Generalizable 3D Gaussian Heads |

|---|

| Tobias Kirschstein, Simon Giebenhain, Jiapeng Tang, Markos Georgopoulos, Matthias Nießner |

| SIGGRAPH Asia 2024 |

| GGHead generates photo-realistic 3D heads and renders them at 1k resolution in real-time. Thanks to the efficiency of 3D Gaussian Splatting, GGHead no longer needs a 2D super-resolution network, which hampered the view-consistency of prior work. We adopt a 3D GAN formulation, which allows training GGHead solely from 2D image datasets. |

| [video][code][bibtex][project page] |

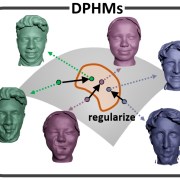

| DPHMs: Diffusion Parametric Head Models for Depth-based Tracking |

|---|

| Jiapeng Tang, Angela Dai, Yinyu Nie, Lev Markhasin, Justus Thies, Matthias Nießner |

| CVPR 2024 |

| We introduce Diffusion Parametric Head Models (DPHMs), a generative model that enables robust volumetric head reconstruction and tracking from monocular depth sequences. Tracking and reconstructing heads from real-world single-view depth sequences is very challenging, as fitting to partial and noisy observations is underconstrained. To tackle these challenges, we propose a latent diffusion-based prior to regularize volumetric head reconstruction and tracking. This prior-based regularizer effectively constrains the identity and expression codes to lie on the underlying latent manifold that represents plausible head shapes. |

| [video][code][bibtex][project page] |

| DiffuScene: Denoising Diffusion Models for Generative Indoor Scene Synthesis |

|---|

| Jiapeng Tang, Yinyu Nie, Lev Markhasin, Angela Dai, Justus Thies, Matthias Nießner |

| CVPR 2024 |

| We present DiffuScene for indoor 3D scene synthesis based on a novel scene configuration denoising diffusion model. It generates 3D instance properties stored in an unordered object set and retrieves the most similar geometry for each object configuration, which is characterized as a concatenation of different attributes, including location, size, orientation, semantics, and geometry features. We introduce a diffusion network to synthesize a collection of 3D indoor objects by denoising a set of unordered object attributes. |

| [video][code][bibtex][project page] |

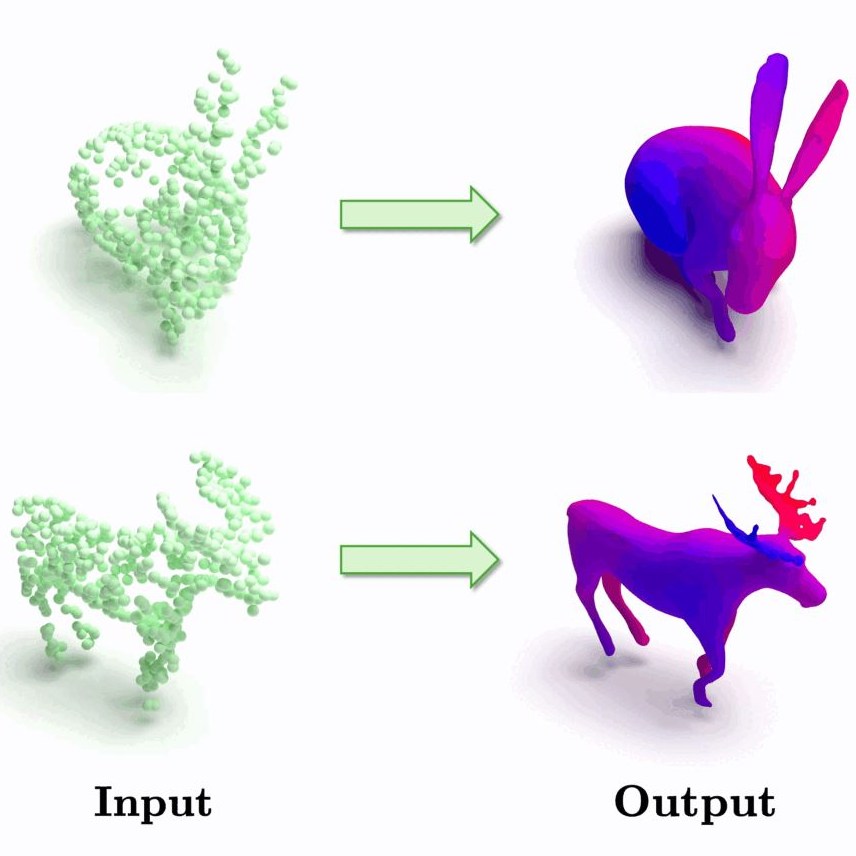

| Motion2VecSets: 4D Latent Vector Set Diffusion for Non-rigid Shape Reconstruction and Tracking |

|---|

| Wei Cao, Chang Luo, Biao Zhang, Matthias Nießner, Jiapeng Tang |

| CVPR 2024 |

| We introduce Motion2VecSets, a 4D diffusion model for dynamic surface reconstruction from point cloud sequences. It explicitly learns the shape and motion distribution of non-rigid objects through an iterative denoising process of compressed latent representations. We parameterize 4D dynamics with latent vector sets instead of a single global latent. This novel 4D representation allows us to learn local surface shape and deformation patterns, leading to more accurate non-linear motion capture and significantly improving generalizability to unseen motions and identities. |

| [video][bibtex][project page] |

2023

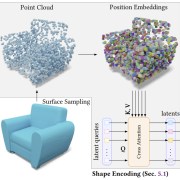

| 3DShape2VecSet: A 3D Shape Representation for Neural Fields and Generative Diffusion Models |

|---|

| Biao Zhang, Jiapeng Tang, Matthias Nießner, Peter Wonka |

| SIGGRAPH 2023 |

| We introduce 3DShape2VecSet, a novel shape representation for neural fields designed for generative diffusion models. Our new representation encodes neural fields on top of a set of vectors. |

| [video][code][bibtex][project page] |

2022

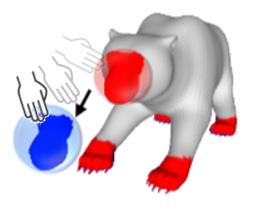

| Neural Shape Deformation Priors |

|---|

| Jiapeng Tang, Lev Markhasin, Bi Wang, Justus Thies, Matthias Nießner |

| NeurIPS 2022 |

| We present Neural Shape Deformation Priors, a novel method for shape manipulation that predicts mesh deformations of non-rigid objects from user-provided handle movements. We learn the geometry-aware deformation behavior from a large-scale dataset containing a diverse set of non-rigid deformations. We introduce transformer-based deformation networks that represent a shape deformation as a composition of local surface deformations. |

| [video][code][bibtex][project page] |