Incremental Dense Semantic Stereo Fusion for Large-Scale Semantic Scene Reconstruction

¹Stanford University, ²University of Oxford, ³Technical University of Munich, ⁴Microsoft Research, ⁵Technicolor R&I

IEEE International Conference on Robotics and Automation (ICRA)

Abstract

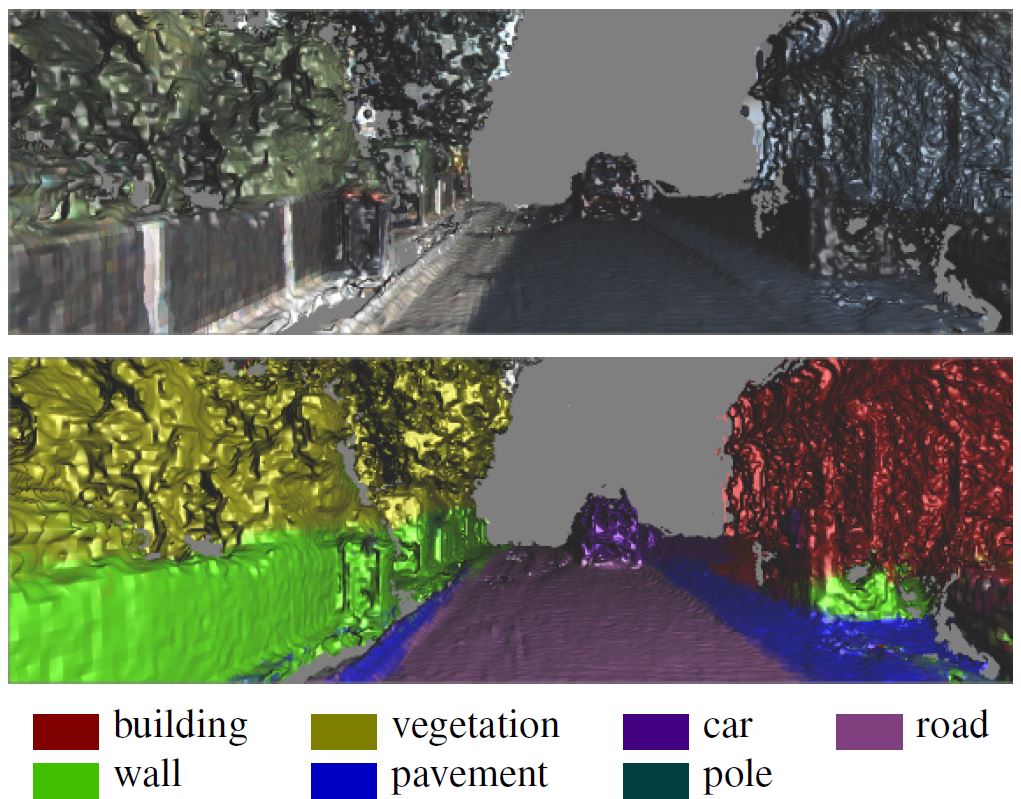

Our abilities in scene understanding, which allow us to perceive the 3D structure of our surroundings and intuitively recognize the objects we see, are things that we largely take for granted. For robots, however, the task of understanding large scenes quickly remains extremely challenging. Recently, scene understanding approaches based on 3D reconstruction and semantic segmentation have become popular, but existing methods either do not scale, fail outdoors, provide only sparse reconstructions, or are rather slow. In this paper, we build on a recent hash-based technique for large-scale fusion and an efficient mean-field inference algorithm for densely connected CRFs to present what to our knowledge is the first system that can perform dense, large-scale, outdoor semantic reconstruction of a scene in (near) real time. We also present a ‘semantic fusion’ approach that allows us to handle dynamic objects more effectively than previous approaches. We demonstrate the effectiveness of our approach on the KITTI dataset, and provide qualitative and quantitative results showing high-quality dense reconstruction and labeling of a number of scenes.

Bibtex
