| Name: | Manuel Dahnert |
|---|---|
| Position: | Ph.D. Candidate |
| E-Mail: | manuel.dahnert@tum.de |
| Phone: | +49-89-289-18165 |
| Room No: | 02.07.034 |

Bio

I am a PhD student at the Visual Computing Lab with a focus on 3D scene understanding, representation, and generation. I wrote my Master's thesis in the same group on Transfer Learning between Synthetic and Real Data. This thesis concluded the Informatics: Games Engineering program (M.Sc.) with specializations in Computer Graphics and Animation and in Hardware-aware Programming. In 2015, I received my Bachelor's degree (B.Sc.) in Informatics: Games Engineering from the Technical University of Munich (TUM). From August 2016 until June 2017, I took part in the Erasmus mobility program, studying at Chalmers University of Technology, Sweden. In spring 2019, I spent three months at Stanford University visiting the Geometric Computation group of Prof. Leonidas Guibas.

For more information, please visit my webpage.

Research Interests

3D Scene Understanding, 3D Reconstruction, Shape Analysis, Generative Modeling

Publications

2024

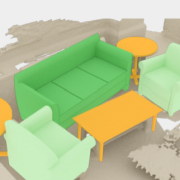

| Coherent 3D Scene Diffusion From a Single RGB Image |
|---|
| Manuel Dahnert, Angela Dai, Norman Müller, Matthias Nießner |
| NeurIPS 2024 |
| We propose a novel diffusion-based method for 3D scene reconstruction from a single RGB image, leveraging an image-conditioned 3D scene diffusion model to denoise object poses and geometries. By learning a generative scene prior that captures inter-object relationships, our approach ensures consistent and accurate reconstructions. |
| [bibtex][project page] |

2021

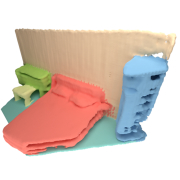

| Panoptic 3D Scene Reconstruction From a Single RGB Image |
|---|
| Manuel Dahnert, Ji Hou, Matthias Nießner, Angela Dai |
| NeurIPS 2021 |
| Panoptic 3D Scene Reconstruction combines the tasks of 3D reconstruction, semantic segmentation, and instance segmentation. From a single RGB image, we predict 2D information and lift it into a sparse volumetric 3D grid, where we predict geometry, semantic labels, and 3D instance labels. |
| [video][code][bibtex][project page] |

2019

| Joint Embedding of 3D Scan and CAD Objects |
|---|
| Manuel Dahnert, Angela Dai, Leonidas Guibas, Matthias Nießner |
| ICCV 2019 |
| In this paper, we address the problem of cross-domain retrieval between partial, incomplete 3D scan objects and complete CAD models. To this end, we learn a joint embedding where semantically similar objects from both domains lie close together regardless of low-level differences, such as clutter or noise. To enable fine-grained evaluation of scan-CAD model retrieval, we additionally present a new dataset of scan-CAD object similarity annotations. |
| [video][bibtex][project page] |

| Scan2CAD: Learning CAD Model Alignment in RGB-D Scans |
|---|
| Armen Avetisyan, Manuel Dahnert, Angela Dai, Angel X. Chang, Manolis Savva, Matthias Nießner |
| CVPR 2019 (Oral) |
| We present Scan2CAD, a novel data-driven method that learns to align clean 3D CAD models from a shape database to the noisy and incomplete geometry of a commodity RGB-D scan. |
| [video][code][bibtex][project page] |