| Name: | Barbara Rössle |
|---|---|
| Position: | Ph.D. Candidate |
| E-Mail: | barbara.roessle@tum.de |
| Phone: | +49 (89) 289 - 18164 |
| Room No: | 02.07.038 |

Bio

I have been a PhD candidate in the Visual Computing Lab since 2021. My research interests are 3D reconstruction and neural rendering. From 2016 to 2021, I developed software for autonomous driving at BMW, focusing on localization and sensor fusion. I studied computer science (M.Sc.) and electrical engineering (B.Eng.) at the Universities of Applied Sciences in Ulm and Esslingen. My master's thesis was on 6-degree-of-freedom vehicle localization for augmented reality. During my studies, I was active in a RoboCup team and spent a semester at Hacettepe University in Ankara, Turkey, as part of the Erasmus program.

Homepage

Research Interest

Neural rendering, 3D reconstruction, novel view synthesis

Publications

2024

| L3DG: Latent 3D Gaussian Diffusion |
|---|
| Barbara Rössle, Norman Müller, Lorenzo Porzi, Samuel Rota Bulò, Peter Kontschieder, Angela Dai, Matthias Nießner |
| SIGGRAPH Asia 2024 |
| L3DG proposes generative modeling of 3D Gaussians using a learned latent space. This substantially reduces the complexity of the costly diffusion generation process, enabling higher detail in object-level generation and scalability to room-scale scenes. |
| [video][bibtex][project page] |

2023

| GANeRF: Leveraging Discriminators to Optimize Neural Radiance Fields |
|---|
| Barbara Rössle, Norman Müller, Lorenzo Porzi, Samuel Rota Bulò, Peter Kontschieder, Matthias Nießner |
| SIGGRAPH Asia 2023 |
| GANeRF proposes an adversarial formulation whose gradients provide feedback for a 3D-consistent neural radiance field representation. This introduces additional constraints that enable more realistic novel view synthesis. |
| [video][bibtex][project page] |

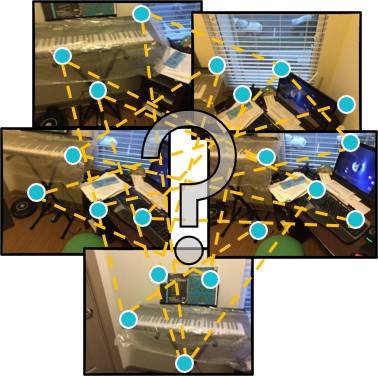

| End2End Multi-View Feature Matching with Differentiable Pose Optimization |
|---|
| Barbara Rössle, Matthias Nießner |
| ICCV 2023 |
| End2End Multi-View Feature Matching connects feature matching and pose optimization in an end-to-end trainable approach that enables matches and confidences to be informed by the pose estimation objective. We introduce GNN-based multi-view matching to predict matches and confidences tailored to a differentiable pose solver, which significantly improves pose estimation performance. |
| [video][bibtex][project page] |

2022

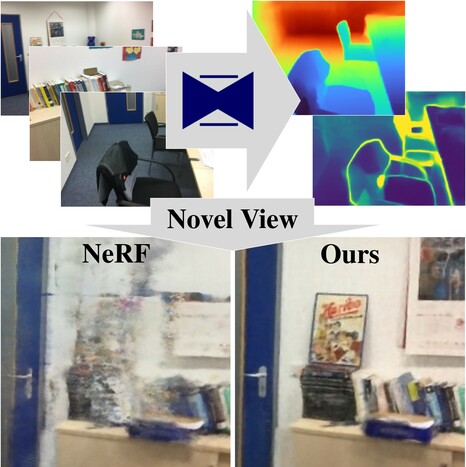

| Dense Depth Priors for Neural Radiance Fields from Sparse Input Views |
|---|
| Barbara Rössle, Jonathan T. Barron, Ben Mildenhall, Pratul P. Srinivasan, Matthias Nießner |
| CVPR 2022 |
| We leverage dense depth priors to recover neural radiance fields (NeRF) of complete rooms when only a handful of input images are available. First, we take advantage of the sparse depth that is freely available from the structure-from-motion (SfM) preprocessing. Second, we use depth completion to convert these sparse points into dense depth maps and uncertainty estimates, which are used to guide NeRF optimization. |
| [video][bibtex][project page] |