| Name: | Lukas Höllein |
|---|---|
| Position: | Ph.D. Candidate |
| E-Mail: | lukas.hoellein@tum.de |
| Phone: | +49 (89) 289 - 18164 |
| Room No: | 02.07.038 |

Bio

Hi, I am Lukas. I'm a PhD student at the Visual Computing & Artificial Intelligence Lab at the Technical University of Munich, supervised by Prof. Dr. Matthias Nießner. For more information, please visit my personal website.

Research Interest

3D reconstruction, generative AI, neural rendering, CUDA

Publications

2025

| WorldExplorer: Towards Generating Fully Navigable 3D Scenes |
|---|
| Manuel-Andreas Schneider, Lukas Höllein, Matthias Nießner |
| SIGGRAPH Asia 2025 |
| WorldExplorer generates 3D scenes from a given text prompt using camera-guided video diffusion models. |
| [video][bibtex][project page] |

| IntrinsiX: High-Quality PBR Generation using Image Priors |
|---|
| Peter Kocsis, Lukas Höllein, Matthias Nießner |
| NeurIPS 2025 |
| Recent generative models directly create shaded images, without any explicit material and shading representation. From text input, we generate renderable PBR maps. We first train separate LoRA modules for the intrinsic properties of albedo, roughness/metallic, and normal. Then, we introduce cross-intrinsic attention using a rerendering loss with importance-weighted light sampling to enable coherent PBR generation. Besides editable image generation, our predictions can be distilled into room-scale scenes using SDS for large-scale PBR texture generation. Our method outperforms text -> image -> PBR methods in both generalization and quality, since directly generating PBR maps does not suffer from the inherent ambiguity of intrinsic image decomposition. In addition, our design choice facilitates SDS-based PBR texture distillation. |
| [video][bibtex][project page] |

| QuickSplat: Fast 3D Surface Reconstruction via Learned Gaussian Initialization |
|---|
| Yueh-Cheng Liu, Lukas Höllein, Matthias Nießner, Angela Dai |
| ICCV 2025 |
| QuickSplat learns data-driven priors to generate dense initializations for 2D Gaussian splatting optimization of large-scale indoor scenes. This provides a strong starting point for the reconstruction, which accelerates the convergence of the optimization and improves the geometry of flat wall structures. It further learns to jointly estimate the densification and update of the scene parameters iteratively, accelerating runtime and reducing depth errors. |
| [video][bibtex][project page] |

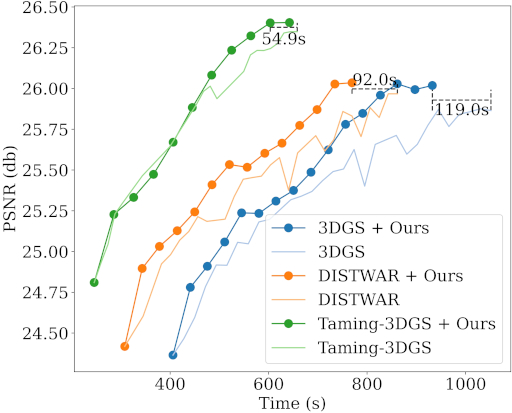

| 3DGS-LM: Faster Gaussian-Splatting Optimization with Levenberg-Marquardt |
|---|
| Lukas Höllein, Aljaž Božič, Michael Zollhöfer, Matthias Nießner |
| ICCV 2025 |
| 3DGS-LM accelerates Gaussian-Splatting optimization by replacing the Adam optimizer with Levenberg-Marquardt. We propose a highly efficient GPU parallelization scheme for PCG and implement it in custom CUDA kernels that compute Jacobian-vector products. |
| [video][bibtex][project page] |

2024

| ViewDiff: 3D-Consistent Image Generation with Text-to-Image Models |
|---|
| Lukas Höllein, Aljaž Božič, Norman Müller, David Novotny, Hung-Yu Tseng, Christian Richardt, Michael Zollhöfer, Matthias Nießner |
| CVPR 2024 |
| ViewDiff generates high-quality, multi-view consistent images of a real-world 3D object in authentic surroundings. We turn pretrained text-to-image models into 3D-consistent image generators by finetuning them with multi-view supervision. |
| [video][bibtex][project page] |

2023

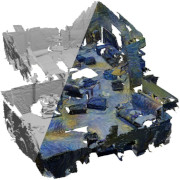

| Text2Room: Extracting Textured 3D Meshes from 2D Text-to-Image Models |
|---|
| Lukas Höllein, Ang Cao, Andrew Owens, Justin Johnson, Matthias Nießner |
| ICCV 2023 |
| Text2Room generates textured 3D meshes from a given text prompt using 2D text-to-image models. The core idea of our approach is a tailored viewpoint selection such that the content of each image can be fused into a seamless, textured 3D mesh. More specifically, we propose a continuous alignment strategy that iteratively fuses scene frames with the existing geometry. |
| [video][bibtex][project page] |

2022

| StyleMesh: Style Transfer for Indoor 3D Scene Reconstructions |
|---|
| Lukas Höllein, Justin Johnson, Matthias Nießner |
| CVPR 2022 |
| We apply style transfer to mesh reconstructions of indoor scenes. We optimize an explicit texture for the reconstructed mesh of a scene and stylize it jointly from all available input images. Our depth- and angle-aware optimization leverages surface normal and depth data of the underlying mesh to create a uniform and consistent stylization for the whole scene. |
| [video][bibtex][project page] |