| Name: | Simon Giebenhain |

|---|---|

| Position: | Ph.D. Candidate |

| E-Mail: | simon.giebenhain@tum.de |

| Phone: | TBD |

| Room No: | 02.07.035 |

Bio

Hi, I’m Simon. My main research interests are neural scene representations, neural rendering, and geometric deep learning. Prior to joining the Visual Computing Lab as a PhD student under the supervision of Prof. Matthias Nießner, I completed my Bachelor's and Master's degrees in computer science at the University of Konstanz in the Computer Vision and Image Analysis Group of Prof. Bastian Goldlücke. During my Bachelor's degree I visited the University of Toronto for two semesters as an exchange student. Homepage

Research Interest

3D Reconstruction, Neural Fields, Neural Rendering, Generative Modeling, Geometric Deep Learning

Publications

2026

| Pixel3DMM: Versatile Screen-Space Priors for Single-Image 3D Face Reconstruction |

|---|

| Simon Giebenhain, Tobias Kirschstein, Martin Rünz, Lourdes Agapito, Matthias Nießner |

| ICLR 2026 |

| Pixel3DMM is a state-of-the-art FLAME tracker using face-specific domain expert ViT predictions for pixel-aligned geometric cues, i.e. UV coordinates and surface normals. The combination of a DINOv2 backbone and large-scale 3D face dataset processing results in extremely robust tracking on in-the-wild data. |

| [video][bibtex][project page] |

2025

| BecomingLit: Relightable Gaussian Avatars with Hybrid Neural Shading |

|---|

| Jonathan Schmidt, Simon Giebenhain, Matthias Nießner |

| NeurIPS 2025 |

| BecomingLit reconstructs intrinsically decomposed head avatars based on 3D Gaussian primitives for real-time relighting and animation. To train our avatars, we introduce a novel multi-view light stage dataset. |

| [video][bibtex][project page] |

| HeadCraft: Modeling High-Detail Shape Variations for Animated 3DMMs |

|---|

| Artem Sevastopolsky, Philip Grassal, Simon Giebenhain, ShahRukh Athar, Luisa Verdoliva, Matthias Nießner |

| 3DV 2025 |

| We learn to generate large displacements for parametric head models, such as long hair, with a high level of detail. The displacements can be added to an arbitrary head for animation and semantic editing. |

| [code][bibtex][project page] |

2024

| GGHead: Fast and Generalizable 3D Gaussian Heads |

|---|

| Tobias Kirschstein, Simon Giebenhain, Jiapeng Tang, Markos Georgopoulos, Matthias Nießner |

| SIGGRAPH Asia 2024 |

| GGHead generates photo-realistic 3D heads and renders them at 1k resolution in real-time. Thanks to the efficiency of 3D Gaussian Splatting, it no longer needs a 2D super-resolution network, which hampered the view-consistency of prior work. We adopt a 3D GAN formulation, which allows training GGHead solely from 2D image datasets. |

| [video][code][bibtex][project page] |

| NPGA: Neural Parametric Gaussian Avatars |

|---|

| Simon Giebenhain, Tobias Kirschstein, Martin Rünz, Lourdes Agapito, Matthias Nießner |

| SIGGRAPH Asia 2024 |

| NPGA is a method to create 3D avatars from multi-view video recordings, which can be precisely animated using the expression space of the underlying neural parametric head model. For increased dynamic representational capacity, we leverage per-Gaussian latent features, which are used to condition our deformation MLP. |

| [video][bibtex][project page] |

| DiffusionAvatars: Deferred Diffusion for High-fidelity 3D Head Avatars |

|---|

| Tobias Kirschstein, Simon Giebenhain, Matthias Nießner |

| CVPR 2024 |

| DiffusionAvatars uses diffusion-based, deferred neural rendering to translate geometric cues from an underlying neural parametric head model (NPHM) to photo-realistic renderings. The underlying NPHM provides accurate control over facial expressions, while the deferred neural rendering leverages the 2D prior of Stable Diffusion to generate compelling images. |

| [video][code][bibtex][project page] |

| MonoNPHM: Dynamic Head Reconstruction from Monocular Videos |

|---|

| Simon Giebenhain, Tobias Kirschstein, Markos Georgopoulos, Martin Rünz, Lourdes Agapito, Matthias Nießner |

| CVPR 2024 |

| MonoNPHM is a neural parametric head model that disentangles geometry, appearance, and facial expression into three separate latent spaces. Using MonoNPHM as a prior, we tackle the task of dynamic 3D head reconstruction from monocular RGB videos, using inverse, SDF-based, volumetric rendering. |

| [video][bibtex][project page] |

| GaussianAvatars: Photorealistic Head Avatars with Rigged 3D Gaussians |

|---|

| Shenhan Qian, Tobias Kirschstein, Liam Schoneveld, Davide Davoli, Simon Giebenhain, Matthias Nießner |

| CVPR 2024 |

| We introduce GaussianAvatars, a new method to create photorealistic head avatars that are fully controllable in terms of expression, pose, and viewpoint. The core idea is a dynamic 3D representation based on 3D Gaussian splats that are rigged to a parametric morphable face model. This combination facilitates photorealistic rendering while allowing for precise animation control via the underlying parametric model. |

| [video][bibtex][project page] |

2023

| NeRSemble: Multi-view Radiance Field Reconstruction of Human Heads |

|---|

| Tobias Kirschstein, Shenhan Qian, Simon Giebenhain, Tim Walter, Matthias Nießner |

| SIGGRAPH 2023 |

| We propose NeRSemble for high-quality novel view synthesis of human heads. We combine a deformation field modeling coarse motion with an ensemble of multi-resolution hash encodings to represent fine expression-dependent details. To train our model, we recorded a novel multi-view video dataset containing over 4700 sequences of human heads covering a variety of facial expressions. |

| [video][code][bibtex][project page] |

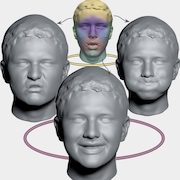

| Learning Neural Parametric Head Models |

|---|

| Simon Giebenhain, Tobias Kirschstein, Markos Georgopoulos, Martin Rünz, Lourdes Agapito, Matthias Nießner |

| CVPR 2023 |

| We present Neural Parametric Head Models (NPHMs) for high-fidelity representation of complete human heads. We utilize a hybrid representation for a person's canonical head geometry, which is deformed using a deformation field to model expressions. To train our model, we captured a large dataset of high-end laser scans of 120 persons in 20 expressions each. |

| [video][bibtex][project page] |