| Name: | Yinyu Nie |
|---|---|
| Position: | Post Doc |
| E-Mail: | yinyu.nie@tum.de |
| Phone: | +49(89)289-17888 |
| Room No: | 02.07.039 |

Bio

I have been working as a postdoctoral researcher at the Visual Computing Lab, Technical University of Munich (TUM) since 2021. Previously, I did my Ph.D. research on content-aware 3D scene understanding at the National Centre for Computer Animation, Bournemouth University from 2017 to 2020. During my Ph.D., I visited the Chinese University of Hong Kong (Shenzhen) and the Shenzhen Research Institute of Big Data from 2019 to 2020 as a visiting researcher. My research interests lie in 3D vision, with a focus on 3D scene understanding, shape analysis and reconstruction. For more details, please check my personal page: https://yinyunie.github.io/

Publications

2024

| Mesh2NeRF: Direct Mesh Supervision for Neural Radiance Field Representation and Generation |

|---|

| Yujin Chen, Yinyu Nie, Benjamin Ummenhofer, Reiner Birkl, Michael Paulitsch, Matthias Müller, Matthias Nießner |

| ECCV 2024 |

| Mesh2NeRF is a method for extracting ground truth radiance fields directly from 3D textured meshes by incorporating mesh geometry, texture, and environment lighting information. Mesh2NeRF serves as direct 3D supervision for neural radiance fields, leveraging mesh data for improving novel view synthesis performance. Mesh2NeRF can function as supervision for generative models during training on mesh collections. |

| [video][bibtex][project page] |

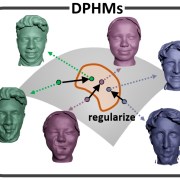

| DPHMs: Diffusion Parametric Head Models for Depth-based Tracking |

|---|

| Jiapeng Tang, Angela Dai, Yinyu Nie, Lev Markhasin, Justus Thies, Matthias Nießner |

| CVPR 2024 |

| We introduce Diffusion Parametric Head Models (DPHMs), a generative model that enables robust volumetric head reconstruction and tracking from monocular depth sequences. Tracking and reconstructing heads from real-world single-view depth sequences is very challenging, as the fitting to partial and noisy observations is underconstrained. To tackle these challenges, we propose a latent diffusion-based prior to regularize volumetric head reconstruction and tracking. This prior-based regularizer effectively constrains the identity and expression codes to lie on the underlying latent manifold which represents plausible head shapes. |

| [video][code][bibtex][project page] |

| DiffuScene: Denoising Diffusion Models for Generative Indoor Scene Synthesis |

|---|

| Jiapeng Tang, Yinyu Nie, Lev Markhasin, Angela Dai, Justus Thies, Matthias Nießner |

| CVPR 2024 |

| We present DiffuScene for indoor 3D scene synthesis based on a novel scene configuration denoising diffusion model. It generates 3D instance properties stored in an unordered object set and retrieves the most similar geometry for each object configuration, which is characterized as a concatenation of different attributes, including location, size, orientation, semantics, and geometry features. We introduce a diffusion network to synthesize a collection of 3D indoor objects by denoising a set of unordered object attributes. |

| [video][code][bibtex][project page] |

2023

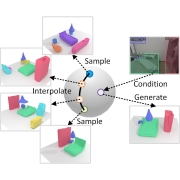

| Learning 3D Scene Priors with 2D Supervision |

|---|

| Yinyu Nie, Angela Dai, Xiaoguang Han, Matthias Nießner |

| CVPR 2023 |

| We learn 3D scene priors with 2D supervision. We model a latent hypersphere surface to represent a manifold of 3D scenes, characterizing the semantic and geometric distribution of objects in 3D scenes. This supports many downstream applications, including scene synthesis, interpolation and single-view reconstruction. |

| [video][bibtex][project page] |

2022

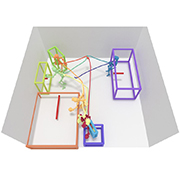

| Pose2Room: Understanding 3D Scenes from Human Activities |

|---|

| Yinyu Nie, Angela Dai, Xiaoguang Han, Matthias Nießner |

| ECCV 2022 |

| From an observed pose trajectory of a person performing daily activities in an indoor scene, we learn to estimate likely object configurations of the scene underlying these interactions, as a set of object class labels and oriented 3D bounding boxes. By sampling from our probabilistic decoder, we synthesize multiple plausible object arrangements. |

| [video][bibtex][project page] |

2021

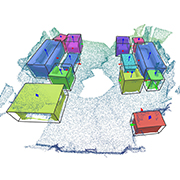

| RfD-Net: Point Scene Understanding by Semantic Instance Reconstruction |

|---|

| Yinyu Nie, Ji Hou, Xiaoguang Han, Matthias Nießner |

| CVPR 2021 |

| We introduce RfD-Net, which jointly detects and reconstructs dense object surfaces from raw point clouds. It leverages the sparsity of point cloud data and focuses on predicting shapes that are recognized with high objectness. This not only eases the difficulty of learning 2D manifold surfaces from sparse 3D space; the point clouds in each object proposal also convey shape details that support implicit function learning to reconstruct high-resolution surfaces. |

| [video][code][bibtex][project page] |