Multiframe Scene Flow with Piecewise Rigid Motion

¹Max Planck Institute for Informatics, ²NVIDIA, ³Technical University of Munich, ⁴DFKI

Proceedings of the International Conference on 3D Vision 2017 (3DV)

Abstract

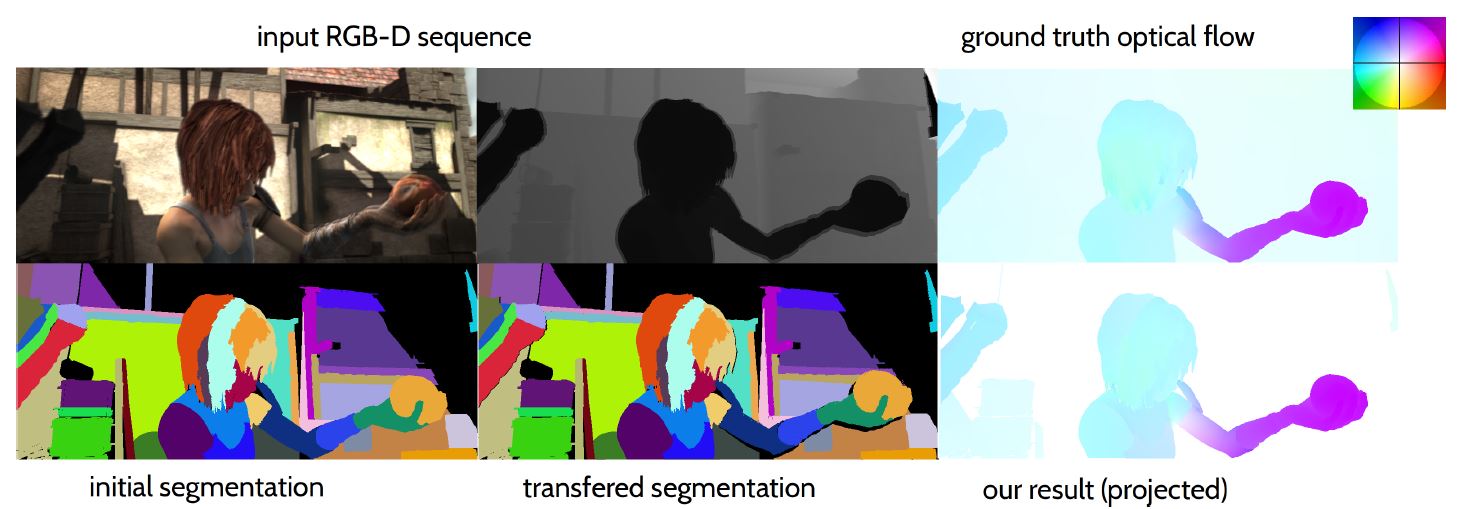

We introduce a novel multi-frame scene flow approach that jointly optimizes the consistency of patch appearances and their local rigid motions from RGB-D image sequences. In contrast to competing methods, we take advantage of an oversegmentation of the reference frame and of robust optimization techniques. We formulate scene flow recovery as a global non-linear least squares problem, which is solved iteratively with a damped Gauss-Newton approach. As a result, we obtain a qualitatively new level of accuracy in RGB-D based scene flow, and the method can potentially run in real time. Our method handles challenging cases with rigid, piecewise rigid, articulated and moderately non-rigid motion; it requires no training data and relies on no prior knowledge about the types of motion and deformation. Extensive experiments on synthetic and real data show that our method outperforms state-of-the-art methods.
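To make the optimization idea concrete, the sketch below shows damped Gauss-Newton with robust weighting for the rigid motion of a single patch. It is an illustration under stated assumptions, not the authors' implementation. It assumes the patch's 3D point correspondences between two frames are already known, uses a Huber loss as the robust function, and uses a simple Levenberg-style damping schedule. The paper's global problem couples all patches and frames and includes appearance terms, which are omitted here. All function names (`fit_patch_motion`, `huber_weights`, and so on) are hypothetical.

```python
import numpy as np

def skew(v):
    """3x3 cross-product matrix [v]_x, so that skew(v) @ u == np.cross(v, u)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])

def rodrigues(w):
    """Rotation matrix exp([w]_x) for an axis-angle vector w (Rodrigues' formula)."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3) + skew(w)  # first-order approximation near zero
    K = skew(w / theta)
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)

def huber_weights(norms, k):
    """IRLS weights for the Huber loss (assumed robust function) on residual norms."""
    return np.where(norms <= k, 1.0, k / np.maximum(norms, 1e-12))

def huber_cost(norms, k):
    """Total Huber cost of the residual norms."""
    return np.sum(np.where(norms <= k, 0.5 * norms**2, k * (norms - 0.5 * k)))

def fit_patch_motion(P, Q, k=0.05, iters=50, lam=1e-3):
    """Hypothetical helper: estimate (R, t) with R @ p + t ~= q for one patch.

    P, Q are (N, 3) arrays of corresponding points in two frames.
    """
    R, t = np.eye(3), np.zeros(3)
    cost = huber_cost(np.linalg.norm(P @ R.T + t - Q, axis=1), k)
    for _ in range(iters):
        Y = P @ R.T + t                      # transformed points y_i = R p_i + t
        r = Y - Q                            # per-point residuals (N, 3)
        w = huber_weights(np.linalg.norm(r, axis=1), k)
        H = np.zeros((6, 6))
        g = np.zeros(6)
        for y_i, r_i, w_i in zip(Y, r, w):
            # Jacobian of r_i w.r.t. a left update (omega, v): [-[y_i]_x, I]
            J = np.hstack([-skew(y_i), np.eye(3)])
            H += w_i * J.T @ J
            g += w_i * J.T @ r_i
        # Damped Gauss-Newton step: (J^T W J + lam I) delta = -J^T W r
        delta = np.linalg.solve(H + lam * np.eye(6), -g)
        dR = rodrigues(delta[:3])
        R_new, t_new = dR @ R, dR @ t + delta[3:]
        new_cost = huber_cost(np.linalg.norm(P @ R_new.T + t_new - Q, axis=1), k)
        if new_cost < cost:                  # accept step, trust the model more
            R, t, cost = R_new, t_new, new_cost
            lam *= 0.5
        else:                                # reject step, increase damping
            lam *= 10.0
        if np.linalg.norm(delta) < 1e-10:
            break
    return R, t
```

The multiplicative update `dR @ R` keeps `R` an exact rotation at every iteration, and the damping term `lam` shifts each step between a Gauss-Newton step (small `lam`) and a short gradient-descent step (large `lam`), which is one common way to make Gauss-Newton robust to poor initializations. The Huber weights bound the influence of outlier correspondences, such as points that do not actually belong to the patch's rigid motion.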
